Three Levels of AI Auditing

November 29, 2022

The phrase “hook, line, and sinker” usually refers to fooling or deceiving someone, but I don’t think it has to; it can also simply mean convincing someone thoroughly. The question of what exactly an AI audit should be, especially an audit for equity, is receiving growing attention. In my mind, there are three levels of AI auditing, just like the hook, line, and sinker. The first level, the hook, is a journalistic approach: a single attention-grabbing, persuasive narrative example that is easy to grasp but may or may not reveal a systemic problem. The second level, the line, is an outside-in study of a system that may reveal a pattern; it need not even be directly related to the first-level hook. The third level, the sinker, requires access to the internals of the system and can be quite detailed. The decision maker doesn’t even have to be AI; human decision making can be audited in the same ways.

Let’s look at a few examples to get a better sense of what I mean.

Gender Shades

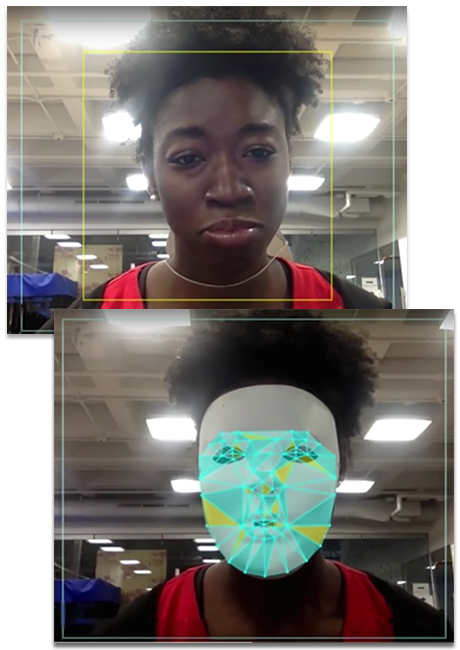

The first-level audit in Joy Buolamwini’s Gender Shades work was her use of a white mask to show that a face-tracking algorithm did not work well on dark-skinned faces.

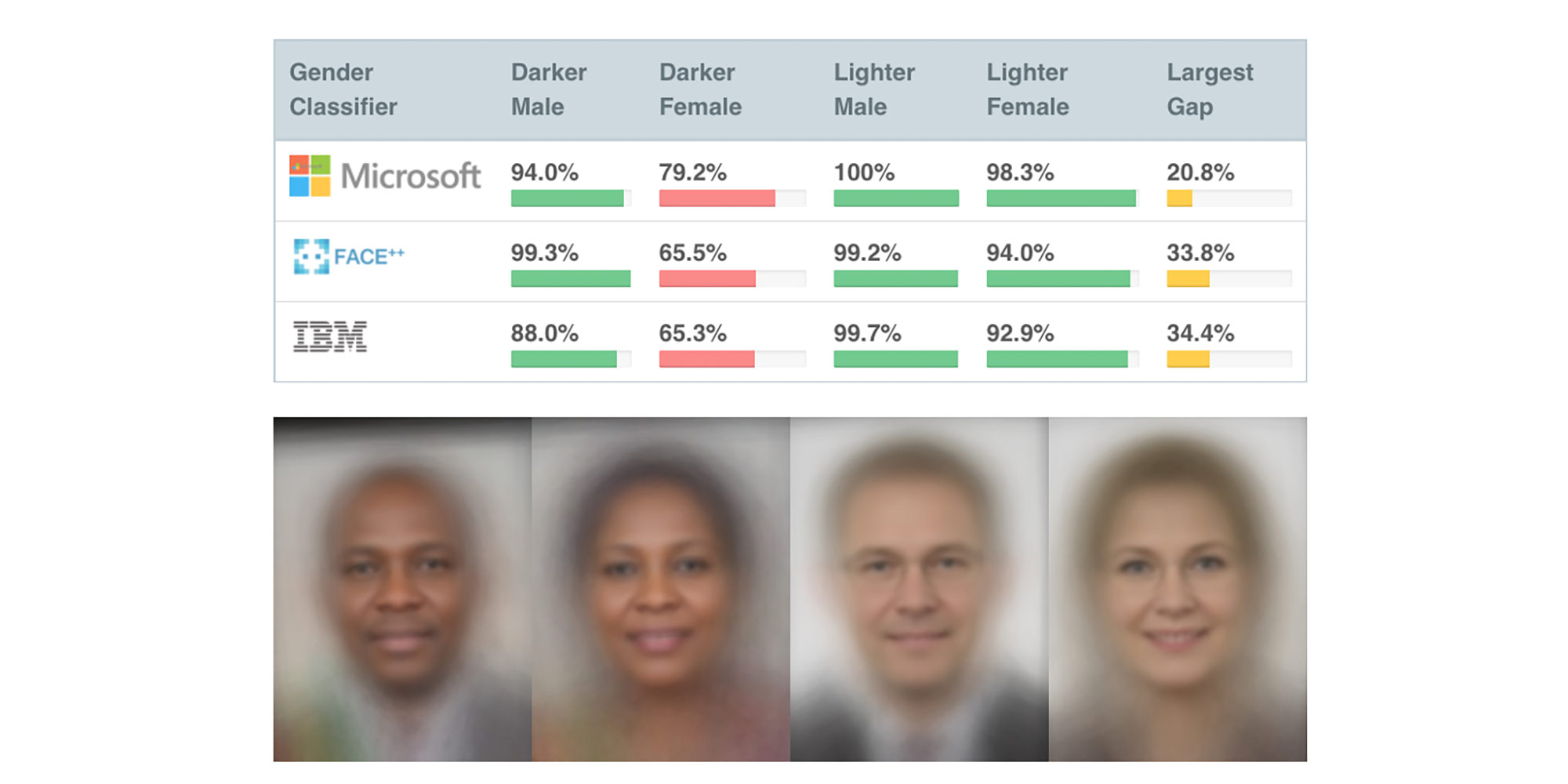

Her second-level audit was the creation of the small Pilot Parliaments Benchmark dataset, which she used to report intersectional differences (by skin type and gender together) in the accuracy of commercial face attribute classification APIs on the gender classification task.
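What makes this second-level analysis intersectional is that accuracy is reported for each combination of skin type and gender rather than for each attribute separately. A minimal sketch of that disaggregation in Python follows; the `Prediction` record, the subgroup names, and the eight toy rows are hypothetical, invented for illustration, and are not the Pilot Parliaments Benchmark or the output of any real API.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Prediction(NamedTuple):
    """One benchmark face: its annotated subgroup and the classifier's output."""
    skin: str       # hypothetical binning, e.g. "darker" or "lighter"
    gender: str     # ground-truth label
    predicted: str  # label returned by the API under audit


def subgroup_accuracy(preds: Iterable[Prediction]) -> dict[tuple[str, str], float]:
    """Accuracy for each (skin, gender) intersection, not each attribute alone."""
    correct: dict[tuple[str, str], int] = defaultdict(int)
    total: dict[tuple[str, str], int] = defaultdict(int)
    for p in preds:
        key = (p.skin, p.gender)
        total[key] += 1
        correct[key] += int(p.predicted == p.gender)
    return {key: correct[key] / total[key] for key in total}


def largest_gap(acc: dict[tuple[str, str], float]) -> float:
    """Accuracy difference between the best- and worst-served subgroups."""
    return max(acc.values()) - min(acc.values())


# Invented toy outputs for illustration only (not real benchmark results).
toy = [
    Prediction("lighter", "male", "male"),
    Prediction("lighter", "male", "male"),
    Prediction("lighter", "female", "female"),
    Prediction("lighter", "female", "male"),
    Prediction("darker", "male", "male"),
    Prediction("darker", "male", "female"),
    Prediction("darker", "female", "male"),
    Prediction("darker", "female", "male"),
]

acc = subgroup_accuracy(toy)
for (skin, gender), value in sorted(acc.items()):
    print(f"{skin:8} {gender:7} {value:.2f}")
print("largest gap:", largest_gap(acc))
```

On this toy data, grouping by gender alone would report 25% accuracy for women and 75% for men, which blurs the split between darker-skinned women (0%) and lighter-skinned women (50%); the intersectional breakdown keeps it visible.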

She didn’t have access to the internals, but with access to some of the embedding spaces used by the models, we did a third-level analysis. We found that skin type and hair length are unlikely to be contributing factors to the disparities; more likely, there is some mismatch between how dark-skinned female celebrities present themselves and how dark-skinned female non-celebrities and politicians do, especially in terms of cosmetics.

Apple Card

The discovery of gender bias in the Apple Card began with a single example reported in a tweet, and it hooked a lot of people.

So many, in fact, that the New York State Department of Financial Services launched a detailed third-level investigation, which eventually exonerated Apple Card and Goldman Sachs, the financial firm that backed it.

COMPAS

ProPublica understood these levels when it disseminated the findings of its famous study of the COMPAS algorithm for predicting criminal recidivism. The main article included both the hook of individual stories, like those of Bernard Parker and Dylan Fugett, and a statistical analysis of a large dataset from Broward County, Florida. More detailed articles on both the first-level and second-level findings were published alongside it.

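Northpointe (now Equivant), the maker of COMPAS, did its own analysis of the same data to refute ProPublica’s, so that was still a second-level audit. (The argument hinged on different definitions of fairness: ProPublica emphasized that error rates, such as how often people who did not reoffend were labeled high risk, differed by race, while Northpointe countered that its scores were equally predictive across races.) The Wisconsin Supreme Court ruled that COMPAS can continue to be used, but only with guardrails. I don’t think there has ever been a third-level analysis that breaks through the proprietary nature of the algorithm.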
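The disagreement over fairness definitions can be made concrete. A minimal Python sketch with invented confusion-matrix counts (not the Broward County data) compares an error-rate view, the false positive rate, with a predictive-parity view, the positive predictive value, for two hypothetical groups that differ only in how often their members reoffend. The `Confusion` class and the group names are hypothetical and illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Confusion-matrix counts for one group; 'positive' means labeled high risk."""
    tp: int  # labeled high risk, did reoffend
    fp: int  # labeled high risk, did not reoffend
    fn: int  # labeled low risk, did reoffend
    tn: int  # labeled low risk, did not reoffend

    def false_positive_rate(self) -> float:
        # Error-rate view: among people who did NOT reoffend, what share was flagged?
        return self.fp / (self.fp + self.tn)

    def positive_predictive_value(self) -> float:
        # Predictive-parity view: among people flagged, what share did reoffend?
        return self.tp / (self.tp + self.fp)


# Invented counts for illustration only; the base rates differ (60/100 vs 30/100).
group_a = Confusion(tp=45, fp=15, fn=15, tn=25)
group_b = Confusion(tp=15, fp=5, fn=15, tn=65)

for name, g in [("A", group_a), ("B", group_b)]:
    ppv = round(g.positive_predictive_value(), 3)
    fpr = round(g.false_positive_rate(), 3)
    print(name, ppv, fpr)
# prints:
# A 0.75 0.375
# B 0.75 0.071
```

Both groups get the same positive predictive value, yet group A’s non-reoffenders are flagged more than five times as often. This is a well-known tension: when base rates differ, an imperfect classifier generally cannot satisfy both criteria at once, so two second-level analyses of the same data can reach opposite verdicts.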

Asylum Hearings

Reuters took the same approach as ProPublica in a story about human (not AI) judgments in asylum cases. The first-level part of the story focuses on two women, Sandra Gutierrez and Ana, whose asylum claims were very similar but who came before different judges: one was granted asylum and the other was not. The second-level part of the story examines the broader pattern across many judges using a large dataset.

Because all of the data is public, Raman et al. were able to carry out an in-depth third-level study of the same issue. They found that partisanship and individual variability among judges play a strong role in the decisions, independent of the merits of a case. This is a new study, and it will be interesting to track what happens as a result of it.

Others

There are many other examples of first-level audits (e.g., an illustration of an object classifier labeling Black people as gorillas, a super-resolution algorithm making Barack Obama white, differences in media depictions of Meghan Markle and Kate Middleton, round-trip translations from English to Turkish and back to English showing gender bias, and gender bias in images generated from text prompts). They sometimes lead to second- and third-level audits (e.g., of image-cropping algorithms that prioritize white people, and of disparities in internet speed), but often they do not.

So What?

Each of the three levels of audit has a role to play: raising awareness and drawing attention, hypothesizing a pattern, and proving it. To the best of my knowledge, no one has laid out these levels in this way, but the distinction matters because the levels involve different goals, different kinds of analysis, different parties and degrees of access, and so on. As the field of AI auditing becomes more standardized and entrenched, we need to be much more precise about what we’re doing. Only then will we achieve the change we want to see, hook, line, and sinker.
